Minimum Viable Kubernetes

If you're reading this, chances are good that you've heard of Kubernetes. (If you haven't, how exactly did you end up here?) But what actually is Kubernetes? Is it "Production-Grade Container Orchestration"? Is it a "Cloud-Native Operating System"? What does either of those phrases even mean?

To be completely honest, I'm not always 100% sure. But I think it's interesting and informative to take a peek under the hood and see what Kubernetes actually does under the many layers of abstraction and indirection. So just for fun, let's see what the absolute bare minimum "Kubernetes cluster" actually looks like. (It's going to be a lot more minimal than setting up Kubernetes the hard way.)

I'm going to assume a basic familiarity with Kubernetes, Linux, and containers, but nothing too advanced. By the way, this is all for learning/exploration purposes, so don't run any of it in production!

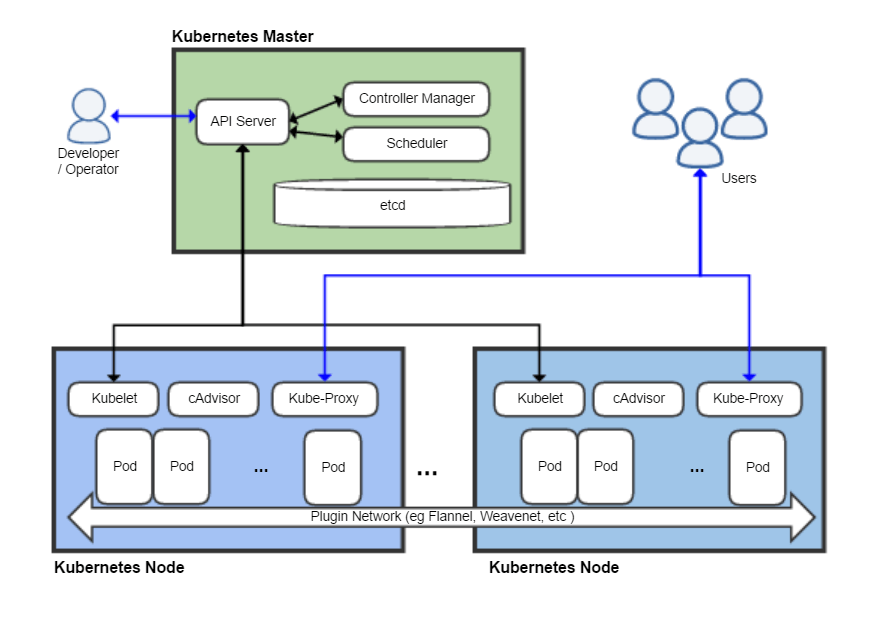

Big Picture View

Kubernetes has a lot of components and it's sometimes a bit difficult to keep track of all of them. Here's what the overall architecture looks like according to Wikipedia:

There are at least eight components listed in that diagram; we're going to be ignoring most of them. I'm going to make the claim that the minimal thing you could reasonably call Kubernetes consists of three essential components:

- kubelet

- kube-apiserver (which depends on etcd as its database)

- A container runtime (Docker in this case)

Let's take a closer look at what each of these does, according to the docs. First, kubelet:

An agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

That sounds simple enough. What about the container runtime?

The container runtime is the software that is responsible for running containers.

Tremendously informative. But if you're familiar with Docker, then you should have a basic idea of what it does. (The details of the separation of concerns between the container runtime and kubelet are actually a bit subtle, but I won't be digging into them here.)

And the API server?

The API server is a component of the Kubernetes control plane that exposes the Kubernetes API. The API server is the front end for the Kubernetes control plane.

Anyone who's ever done anything with Kubernetes has interacted with the API, either directly or through kubectl. It's the core of what makes Kubernetes Kubernetes, the brain that turns the mountains of YAML we all know and love (?) into running infrastructure. It seems obvious that we'll want to get it running for our minimal setup.

Prerequisites If You Want to Follow Along

- A Linux virtual or corporeal machine you're OK messing around with as root (I'm using Ubuntu 18.04 on a VM).

- That's it!

The Boring Setup

The machine we're using needs Docker installed. (I'm not going to dig too much into how Docker and containers work; there are some amazing rabbit holes already out there if you're interested.) Let's just install it using apt:

```
$ sudo apt install docker.io
$ sudo systemctl start docker
```

Next we'll need to get the Kubernetes binaries. We actually only need kubelet to bootstrap our "cluster", since we can use kubelet to run the other server components. We'll also grab kubectl to interact with our cluster once it's up and running.

```
$ curl -L https://dl.k8s.io/v1.18.5/kubernetes-server-linux-amd64.tar.gz > server.tar.gz
$ tar xzvf server.tar.gz
$ cp kubernetes/server/bin/kubelet .
$ cp kubernetes/server/bin/kubectl .
$ ./kubelet --version
Kubernetes v1.18.5
```

Off To The Races

What happens when we try to run kubelet?

```
$ ./kubelet
F0609 04:03:29.105194 4583 server.go:254] mkdir /var/lib/kubelet: permission denied
```

kubelet needs to run as root; fair enough, since it's tasked with managing the entire node. Let's see what the CLI options look like:

```
$ ./kubelet -h
<far too much output to copy here>
$ ./kubelet -h | wc -l
284
```

Holy cow, that's a lot of options! Thankfully we'll only need a couple of them for our setup. Here's an option that looks kind of interesting:

--pod-manifest-path string

Path to the directory containing static pod files to run, or the path to a single static pod file. Files starting with dots will be ignored. (DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/ for more information.)

This option allows us to run static pods, which are pods that aren't managed through the Kubernetes API. Static pods aren't that common in day-to-day Kubernetes usage but are very useful for bootstrapping clusters, which is exactly what we're trying to do here. We're going to ignore the loud deprecation warning (again, don't run this in prod!) and see if we can run a pod.
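Because static pods are just files in a directory that kubelet watches, anything that can write a file can "deploy" to them. Here is a minimal Python sketch under a few assumptions. The helper names `static_pod_manifest` and `write_static_pod` are hypothetical and not part of any Kubernetes tooling. The sketch relies on kubelet accepting JSON manifests as well as YAML. It also relies on the "files starting with dots will be ignored" rule quoted above, so a half-written file is never visible to kubelet.

```python
import json
from pathlib import Path


def static_pod_manifest(name: str, image: str, command: list) -> dict:
    """Build a minimal single-container v1 Pod as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {"name": name, "image": image, "command": list(command)},
            ],
        },
    }


def write_static_pod(manifest_dir: str, pod: dict) -> Path:
    """Write `pod` into the directory kubelet watches via --pod-manifest-path.

    The file is first written under a dot-prefixed name (which kubelet ignores)
    and then renamed into place, so kubelet never reads a half-written manifest.
    """
    directory = Path(manifest_dir)
    directory.mkdir(parents=True, exist_ok=True)
    final = directory / f"{pod['metadata']['name']}.json"
    tmp = directory / f".{final.name}.tmp"
    tmp.write_text(json.dumps(pod, indent=2))
    tmp.replace(final)  # atomic rename on POSIX filesystems
    return final
```

Calling `write_static_pod("pods", static_pod_manifest("hello", "busybox", ["echo", "hello world!"]))` should be roughly equivalent to the hello.yaml heredoc used in this section.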

First we'll make a static pod directory and run kubelet:

```
$ mkdir pods
$ sudo ./kubelet --pod-manifest-path=pods
```

Then, in another terminal/tmux window/whatever, we'll make a pod manifest:

```
$ cat <<EOF > pods/hello.yaml
apiVersion: v1
kind: Pod
metadata:
  name: hello
spec:
  containers:
  - image: busybox
    name: hello
    command: ["echo", "hello world!"]
EOF
```

kubelet starts spitting out some warnings; other than that, it anticlimactically appears that nothing really happened. But not so! Let's check Docker:

```
$ sudo docker ps -a
CONTAINER ID   IMAGE                  COMMAND                 CREATED          STATUS                      PORTS   NAMES
8c8a35e26663   busybox                "echo 'hello world!'"   36 seconds ago   Exited (0) 36 seconds ago           k8s_hello_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_4
68f670c3c85f   k8s.gcr.io/pause:3.2   "/pause"                2 minutes ago    Up 2 minutes                        k8s_POD_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_0
$ sudo docker logs k8s_hello_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_4
hello world!
```

kubelet read the pod manifest and instructed Docker to start a couple of containers according to our specification. (If you're wondering about that "pause" container, it's Kubernetes hackery that's used to reap zombie processes; see this blog post for the gory details.) kubelet will run our busybox container with our command and restart it ad infinitum until the static pod is removed.
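Those long Docker container names aren't random. In this version, kubelet's built-in Docker integration packs five fields into each name, separated by underscores: the container name, the pod name, the namespace, the pod UID, and an attempt counter. The pause container's slot is filled by the literal "POD". The pod is also called hello-mink8s rather than hello, because static pods get the node name appended. Here is a small Python sketch that decodes the names from the `docker ps` output above. It assumes the six-field layout seen there, and `parse_container_name` is a hypothetical helper.

```python
from typing import NamedTuple


class KubeletContainerName(NamedTuple):
    container: str  # "POD" for the pause (sandbox) container
    pod: str
    namespace: str
    pod_uid: str
    attempt: int  # bumped each time kubelet recreates the container


def parse_container_name(name: str) -> KubeletContainerName:
    """Decode k8s_<container>_<pod>_<namespace>_<pod-uid>_<attempt>.

    Kubernetes names and namespaces can't contain underscores, so a plain
    split is unambiguous for the six-field form. Docker sometimes reports
    names with a leading "/", which is stripped first.
    """
    parts = name.lstrip("/").split("_")
    if len(parts) != 6 or parts[0] != "k8s":
        raise ValueError(f"not a kubelet-managed container name: {name!r}")
    _, container, pod, namespace, pod_uid, attempt = parts
    return KubeletContainerName(container, pod, namespace, pod_uid, int(attempt))


for n in [
    "k8s_hello_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_4",
    "k8s_POD_hello-mink8s_default_ab61ef0307c6e0dee2ab05dc1ff94812_0",
]:
    print(parse_container_name(n))
```

Both names decode to the same pod and UID. This is how the busybox container and its pause sandbox are tied together.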

Let's congratulate ourselves: we've just figured out one of the world's most convoluted ways of printing out text to the terminal!

Getting etcd running

Our eventual goal is to run the Kubernetes API, but in order to do that we'll need etcd running first. A static pod ought to fit the bill. Let's run a minimal etcd cluster by putting the following in a file in the pods directory (e.g. pods/etcd.yaml):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: etcd
  namespace: kube-system
spec:
  containers:
  - name: etcd
    command:
    - etcd
    - --data-dir=/var/lib/etcd
    image: k8s.gcr.io/etcd:3.4.3-0
    volumeMounts:
    - mountPath: /var/lib/etcd
      name: etcd-data
  hostNetwork: true
  volumes:
  - hostPath:
      path: /var/lib/etcd
      type: DirectoryOrCreate
    name: etcd-data
```

If you've ever worked with Kubernetes, this kind of YAML file should look familiar. There are only two slightly unusual things worth noting:

- We mounted the host's /var/lib/etcd to the pod so that the etcd data will survive restarts (if we didn't do this, the cluster state would get wiped every time the pod restarted, which would be a drag even for a minimal Kubernetes setup).

- We set hostNetwork: true, which, unsurprisingly, sets up the etcd pod to use the host network instead of the pod-internal network (this will make it easier for the API server to find the etcd cluster).

Some quick sanity checks show that etcd is indeed listening on localhost and writing to disk:

```
$ curl localhost:2379/version
{"etcdserver":"3.4.3","etcdcluster":"3.4.0"}
$ sudo tree /var/lib/etcd/
/var/lib/etcd/
└── member
    ├── snap
    │   └── db
    └── wal
        ├── 0.tmp
        └── 0000000000000000-0000000000000000.wal
```

Running the API server

Getting the Kubernetes API server running is even easier. The only CLI flag we have to pass is --etcd-servers, which does what you'd expect:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    - --etcd-servers=http://127.0.0.1:2379
    image: k8s.gcr.io/kube-apiserver:v1.18.5
  hostNetwork: true
```

Put that YAML file in the pods directory and the API server will start. Some quick curling shows that the Kubernetes API is listening on port 8080 with completely open access: no authentication necessary!

```
$ curl localhost:8080/healthz
ok
$ curl localhost:8080/api/v1/pods
{
  "kind": "PodList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/pods",
    "resourceVersion": "59"
  },
  "items": []
}
```

(Again, don't run this setup in production! I was a bit surprised that the default setup is so insecure, but I assume it's to make development and testing easier.)
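Because the open port needs no credentials, any HTTP client can talk to it. Here is a minimal Python sketch that does the same thing as the second curl above, using only the standard library. The `list_pods` and `pod_names` helpers are made up for illustration, and the sketch assumes the API is at 127.0.0.1:8080 as in this setup.

```python
import json
import urllib.request

API = "http://127.0.0.1:8080"  # the unauthenticated port from the setup above


def pod_names(pod_list: dict) -> list:
    """Return "namespace/name" for every item in a v1 PodList."""
    return [
        f"{item['metadata'].get('namespace', 'default')}/{item['metadata']['name']}"
        for item in pod_list.get("items", [])
    ]


def list_pods(api: str = API) -> list:
    """GET /api/v1/pods (all namespaces) and summarize the PodList."""
    with urllib.request.urlopen(f"{api}/api/v1/pods", timeout=5) as resp:
        return pod_names(json.load(resp))
```

At this point `list_pods()` should return an empty list, matching the empty `items` array. The JSON handling lives in `pod_names`, separate from the HTTP call, so it can be checked without a running cluster.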

And, as a nice surprise, kubectl works out of the box with no extra configuration!

```
$ ./kubectl version
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.5", GitCommit:"e6503f8d8f769ace2f338794c914a96fc335df0f", GitTreeState:"clean", BuildDate:"2020-06-26T03:47:41Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.5", GitCommit:"e6503f8d8f769ace2f338794c914a96fc335df0f", GitTreeState:"clean", BuildDate:"2020-06-26T03:39:24Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
$ ./kubectl get pod
No resources found in default namespace.
```

Easy, right?

A Problem

But digging a bit deeper, something seems amiss:

```
$ ./kubectl get pod -n kube-system
No resources found in kube-system namespace.
```

Those static pods we set up earlier are missing! In fact, our kubelet-running node isn't showing up at all:

```
$ ./kubectl get nodes
No resources found in default namespace.
```

What's the issue? Well, if you remember from a few paragraphs back, we're running kubelet with an extremely basic set of CLI flags, so kubelet doesn't know how to communicate with the API server and update it on its status. After some perusing of the CLI documentation, we find the relevant flag:

--kubeconfig string

Path to a kubeconfig file, specifying how to connect to the API server. Providing --kubeconfig enables API server mode, omitting --kubeconfig enables standalone mode.

This whole time we've been running kubelet in "standalone mode" without knowing it. (If we were being pedantic we might have considered standalone kubelet to be the "minimum viable Kubernetes setup", but that would have made for a boring blog post.) To get the "real" setup working we need to pass a kubeconfig file to kubelet so that it knows how to talk to the API server. Thankfully that's pretty easy (since we have no authentication or certificates to worry about):

```yaml
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://127.0.0.1:8080
  name: mink8s
contexts:
- context:
    cluster: mink8s
  name: mink8s
current-context: mink8s
```

Save that as kubeconfig.yaml, kill the kubelet process, and restart it with the necessary CLI flag:

```
$ sudo ./kubelet --pod-manifest-path=pods --kubeconfig=kubeconfig.yaml
```

(As an aside, if you try curling the API while kubelet is dead, you'll find that it still works! kubelet isn't a "parent" of its pods in the way that Docker is; it's more like a "management daemon". kubelet-managed containers will keep running indefinitely until kubelet stops them.)
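Node registration isn't instant, so instead of re-running kubectl until the node appears, you could poll the API for it. Here is a Python sketch that assumes the open port at 127.0.0.1:8080 and a node named mink8s; the helper names are hypothetical. A node counts as ready when its `status.conditions` list contains a `Ready` condition whose status is the string "True".

```python
import json
import time
import urllib.request

API = "http://127.0.0.1:8080"  # unauthenticated port from the setup above


def node_is_ready(node: dict) -> bool:
    """True if a v1 Node reports a Ready condition with status "True"."""
    for cond in node.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            return cond.get("status") == "True"
    return False


def wait_for_node(name: str, api: str = API, timeout: float = 300.0,
                  interval: float = 5.0) -> bool:
    """Poll GET /api/v1/nodes/<name> until the node is Ready or time runs out."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{api}/api/v1/nodes/{name}", timeout=5) as resp:
                if node_is_ready(json.load(resp)):
                    return True
        except OSError:
            # Covers urllib's HTTPError (404 until kubelet registers the node),
            # URLError (API unreachable), and socket timeouts.
            pass
        time.sleep(interval)
    return False
```

`wait_for_node("mink8s")` returns True once kubelet has registered the node and reported it Ready. Otherwise it returns False after the (arbitrary) five-minute timeout.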

After a few minutes, kubectl should show us the pods and nodes as expected:

```
$ ./kubectl get pods -A
NAMESPACE     NAME                    READY   STATUS             RESTARTS   AGE
default       hello-mink8s            0/1     CrashLoopBackOff   261        21h
kube-system   etcd-mink8s             1/1     Running            0          21h
kube-system   kube-apiserver-mink8s   1/1     Running            0          21h
$ ./kubectl get nodes -owide
NAME     STATUS   ROLES    AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION       CONTAINER-RUNTIME
mink8s   Ready    <none>   21h   v1.18.5   10.70.10.228   <none>        Ubuntu 18.04.4 LTS   4.15.0-109-generic   docker://19.3.6
```

Let's congratulate ourselves for real this time (I know I did at this point): we have an extremely minimal Kubernetes "cluster" running with a fully functioning API!
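The 261 restarts on hello-mink8s are CrashLoopBackOff at work. Our echo command exits immediately, the default restartPolicy is Always, and kubelet waits longer before each restart. The Kubernetes docs describe this delay as exponential (10s, 20s, 40s, …) and capped at five minutes. The Python sketch below models only that documented schedule. It ignores details such as the backoff reset after a container has run cleanly for a while, and `restart_delays` is a hypothetical name.

```python
def restart_delays(restarts: int, base: float = 10.0, cap: float = 300.0) -> list:
    """Delays (seconds) kubelet waits before each of `restarts` restarts.

    Models only the documented CrashLoopBackOff schedule: start at 10s,
    double after every restart, never exceed five minutes.
    """
    delays = []
    delay = base
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)
    return delays


total = sum(restart_delays(261))
print(f"{total:.0f} s = {total / 3600:.1f} h")
```

Summing the first 261 delays gives 10 + 20 + 40 + 80 + 160 + 256 × 300 = 77,110 seconds, or about 21.4 hours. That lines up nicely with the 21h AGE column above.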

Getting a Pod Running

Now let's see what the API can do. We'll start with the old standby, an nginx pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
```

When we try to apply it, we find a rather curious error:

```
$ ./kubectl apply -f nginx.yaml
Error from server (Forbidden): error when creating "nginx.yaml": pods "nginx" is forbidden: error looking up service account default/default: serviceaccount "default" not found
$ ./kubectl get serviceaccounts
No resources found in default namespace.
```

This is our first indication of how woefully incomplete our Kubernetes environment is: there are no service accounts for our pods to use. Let's try again by making a default service account manually and see what happens:

```
$ cat <<EOS | ./kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: default
EOS
serviceaccount/default created
$ ./kubectl apply -f nginx.yaml
Error from server (ServerTimeout): error when creating "nginx.yaml": No API token found for service account "default", retry after the token is automatically created and added to the service account
```

Even when we make the service account manually, the authentication token never gets created. As we continue using our minimal "cluster", we'll find that most of the useful things that normally happen automatically will be missing. The Kubernetes API server is pretty minimal; most of the heavy automatic lifting happens in various controllers and background jobs that aren't running yet.
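A controller is just a client of this same API. It repeatedly compares the objects that exist with the objects that should exist and closes the gap. The Python sketch below is a toy, single-pass version of one part of the real ServiceAccount controller's job: making sure every namespace has a service account named default. It does nothing about tokens, which a real cluster handles in a separate token controller. The helper names are hypothetical, and a real controller would use watches and run continuously rather than listing once.

```python
import json
import urllib.request

API = "http://127.0.0.1:8080"  # unauthenticated port from the setup above


def missing_default_sa(namespaces: dict, service_accounts: dict) -> list:
    """Namespaces (from a NamespaceList) with no ServiceAccount named "default"."""
    covered = {
        sa["metadata"]["namespace"]
        for sa in service_accounts.get("items", [])
        if sa["metadata"]["name"] == "default"
    }
    return sorted(
        ns["metadata"]["name"]
        for ns in namespaces.get("items", [])
        if ns["metadata"]["name"] not in covered
    )


def _get(api: str, path: str) -> dict:
    with urllib.request.urlopen(api + path, timeout=5) as resp:
        return json.load(resp)


def reconcile_once(api: str = API) -> list:
    """Observe actual state, diff it against desired state, act on the difference."""
    todo = missing_default_sa(
        _get(api, "/api/v1/namespaces"), _get(api, "/api/v1/serviceaccounts")
    )
    for ns in todo:
        body = {
            "apiVersion": "v1",
            "kind": "ServiceAccount",
            "metadata": {"name": "default", "namespace": ns},
        }
        req = urllib.request.Request(
            f"{api}/api/v1/namespaces/{ns}/serviceaccounts",
            data=json.dumps(body).encode(),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=5):
            pass  # 201 Created; failures raise urllib.error.HTTPError
    return todo
```

Running `reconcile_once()` twice in a row should be harmless: the second pass finds nothing missing and does nothing. That idempotent "make it so" style is what Kubernetes controllers aim for.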

We can sidestep this particular issue by setting the automountServiceAccountToken option on the service account (since we won't need to use the service account anyway):

```
$ cat <<EOS | ./kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: default
automountServiceAccountToken: false
EOS
serviceaccount/default configured
$ ./kubectl apply -f nginx.yaml
pod/nginx created
$ ./kubectl get pods
NAME    READY   STATUS    RESTARTS   AGE
nginx   0/1     Pending   0          13m
```

Finally, the pod shows up! But it won't actually start, since we're missing the scheduler, another essential Kubernetes component. Again, we see that the Kubernetes API is surprisingly "dumb": when you create a pod in the API, it registers the pod's existence but doesn't try to figure out which node to run it on.
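The scheduler's output is small. For each pod without a node, it picks one and records the choice by POSTing a Binding object to the pod's binding subresource. With a single node there's nothing to pick, so a toy stand-in fits in a few lines of Python. This is a sketch under several assumptions: the open port on 127.0.0.1:8080, one node named mink8s, and hypothetical helper names. It is not how kube-scheduler is actually structured.

```python
import json
import urllib.parse
import urllib.request

API = "http://127.0.0.1:8080"  # unauthenticated port from the setup above
NODE = "mink8s"  # replace with whatever your node is called


def unscheduled(pod_list: dict) -> list:
    """(namespace, name) for each pod in a PodList that has no spec.nodeName."""
    return [
        (p["metadata"].get("namespace", "default"), p["metadata"]["name"])
        for p in pod_list.get("items", [])
        if not p.get("spec", {}).get("nodeName")
    ]


def binding(name: str, namespace: str, node: str) -> dict:
    """The v1 Binding object that assigns pod `name` to `node`."""
    return {
        "apiVersion": "v1",
        "kind": "Binding",
        "metadata": {"name": name, "namespace": namespace},
        "target": {"apiVersion": "v1", "kind": "Node", "name": node},
    }


def schedule_all(api: str = API, node: str = NODE) -> list:
    """Bind every unassigned pod to our one and only node."""
    query = urllib.parse.urlencode({"fieldSelector": "spec.nodeName="})
    with urllib.request.urlopen(f"{api}/api/v1/pods?{query}", timeout=5) as resp:
        pods = unscheduled(json.load(resp))  # re-check client-side as well
    for namespace, name in pods:
        req = urllib.request.Request(
            f"{api}/api/v1/namespaces/{namespace}/pods/{name}/binding",
            data=json.dumps(binding(name, namespace, node)).encode(),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=5):
            pass  # 201 Created on success
    return pods
```

Binding sets the pod's spec.nodeName. Once `schedule_all()` binds the Pending nginx pod, kubelet should see the assignment and start the pod, much as if nodeName had been written into the manifest by hand.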

But we don't actually need the scheduler to get pods running. We can just add the node manually to the manifest with the nodeName option:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
  nodeName: mink8s
```

(You'll replace mink8s with whatever the node is called.) After deleting the old pod and reapplying, we see that nginx will start up and listen on an internal IP address:

```
$ ./kubectl delete pod nginx
pod "nginx" deleted
$ ./kubectl apply -f nginx.yaml
pod/nginx created
$ ./kubectl get pods -owide
NAME    READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
nginx   1/1     Running   0          30s   172.17.0.2   mink8s   <none>           <none>
$ curl -s 172.17.0.2 | head -4
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
```

To confirm that pod-to-pod networking is working correctly, we can run curl from a different pod:

```
$ cat <<EOS | ./kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl
spec:
  containers:
  - image: curlimages/curl
    name: curl
    command: ["curl", "172.17.0.2"]
  nodeName: mink8s
EOS
pod/curl created
$ ./kubectl logs curl | head -6
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
```

It's fun to poke around this environment to see what works and what doesn't. I found that ConfigMaps and Secrets work as expected, but Services and Deployments are pretty much a no-go for now.

SUCCESS!

This post is getting a bit long, so I'm going to declare victory and arbitrarily state that this is the minimum viable setup that could reasonably be called "Kubernetes". To recap: with 4 binaries, 5 CLI flags, and a "mere" 45 lines of YAML (not much by Kubernetes standards), we have a fair number of things working:

- Pods can be managed using the normal Kubernetes API (with a few hacks)

- Public container images can be pulled and managed

- Pods are kept alive and automatically restarted

- Pod-to-pod networking within a single node works pretty much fine

- ConfigMaps, Secrets, and basic volume mounts work as expected

But most of what makes Kubernetes truly useful is still missing, for example:

- Pod scheduling

- Authentication/authorization

- Multiple nodes

- Networking through Services

- Cluster-internal DNS

- Controllers for service accounts, deployments, cloud provider integration, and most of the other "goodies" that Kubernetes brings

So what have we actually set up? The Kubernetes API running by itself is really just a platform for automating containers. It doesn't do anything fancy (that's the job of the various controllers and operators that use the API), but it does provide a consistent basis for automation. (In future posts I might dig into the details of some of the other Kubernetes components.)